As you probably have heard by now, NRCA has launched its national certification initiative, NRCA ProCertification™, to build a competent, sustainable, high-performing roofing and waterproofing industry workforce. NRCA ProCertification is designed to:

- Create a career path for roofing industry field workers

- Elevate the roofing industry to be on par with other trade professions that currently offer national certifications

- Address the workforce shortage by making the roofing industry more appealing

- Protect consumers by providing national, professional certifications for those doing work on their homes or buildings

- Educate consumers about the value of hiring NRCA ProCertified roof system installers and foremen

- Increase consumer confidence that workers have the knowledge and skills to do the job well

NRCA uses hands-on performance exams for NRCA ProCertification to certify installers and Qualified Assessors, the people who evaluate installers’ work. Because NRCA wants its exams to measure the skills important to the integrity of roof system installations, hands-on performance exams make sense and are designed especially for tasks requiring a combination of mental and physical skills. Hands-on performance exams also are an effective way to test people’s ability to follow safety regulations.

NRCA strives to comply with accepted testing practices. For installation, the challenge is creating valid yet feasible tests for current and emerging roof systems. For online testing, the challenge is complying with standards that were developed for multiple-choice knowledge tests.

Face validity is the degree to which a test appears to be effective in terms of its stated aims. Tests that use multiple-choice questions have low face validity unless the work requires people to read information and then select actions from a menu of choices. NRCA is paving new testing ground while raising standards for those wanting to upskill their workforces.

Why care about testing standards?

The American National Standards Institute, the U.S. member body of the International Organization for Standardization, publishes standards for organizations that certify people in specific jobs. The National Commission for Certifying Agencies also has standards for certifications. And the International Society for Performance Improvement develops standards for programs designed to improve the performance of a workforce. All three groups require a job study to identify and confirm the skills and knowledge a job requires, which in this case is roofing work. The job study also provides evidence the tests used to assess knowledge and skills are valid and reliable. Validity means a test measures what it claims to measure and every version of the test is equivalent. Reliability means a test measures the same skills in the same way over time.

Striving to meet these standards means NRCA can apply for accreditation after NRCA ProCertification is in place for at least two years. Earning an accreditation requires the program to be reviewed by an independent third party that attests the certification meets professional standards. Accreditation adds credibility, and NRCA is complying with all three groups’ standards.

NRCA ProCertification’s journey

The previous year can be looked at as a journey for NRCA ProCertification, with a few side trips along the way as NRCA staff and members tried out ideas and learned what does and doesn’t work. As a result, NRCA ProCertification has high face validity compared with traditional tests that use multiple-choice questions. Proving installers can complete tasks and answer questions about their work brings value to the industry, employers, installers and consumers.

Why hands-on exams?

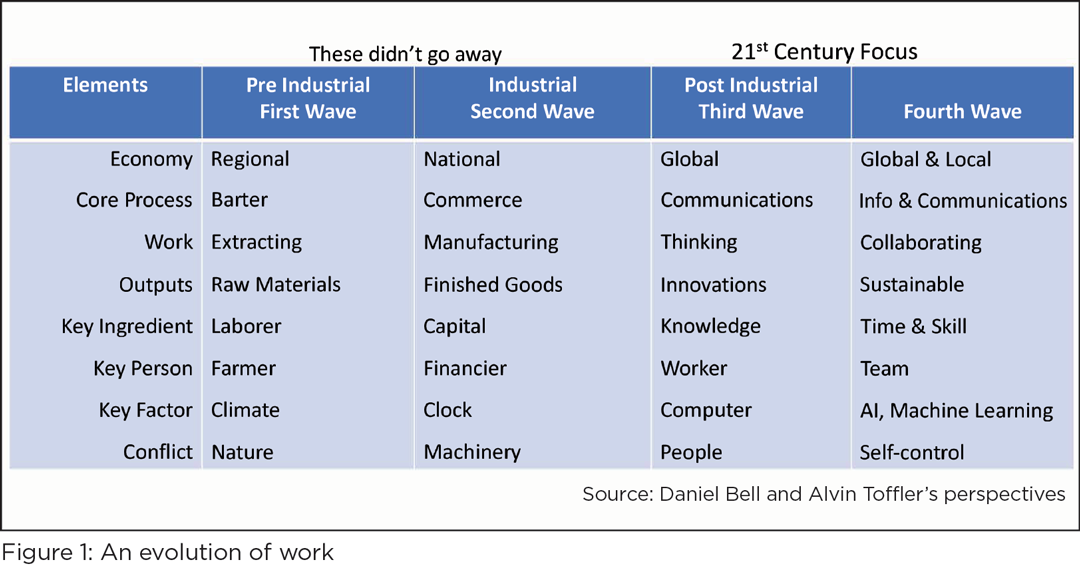

In the past, trades relied on apprenticeships to train their workers. Apprentices learned by doing. Demonstrating that workers could complete tasks to standard was automatically built into the training. During the past century, schools shifted their focus to knowledge work, shop classes were dropped and computer classes were added. With these changes, apprenticeships were devalued, and the trades were left behind. White-collar office jobs became the sought-after work, and though skilled trades still are needed, few foresaw the need to recruit people to the trades. Figure 1 provides an overview of the evolution of work.

Because most roofing work now is learned on the job from employers, accredited hands-on performance tests through NRCA ProCertification will provide standards for the industry. Hands-on performance tests measure people’s ability to complete tasks under conditions that reflect the workplace. The best way to judge people’s capability is to watch them complete tasks. On a roof, this would mean having someone present all the time to watch installers work without correcting their mistakes (a testing rule) unless an error was unsafe.

An alternative is to examine work once it is done. In the roofing world, this would mean checking each task once it is completed. However, neither method is practical. So, as is done for crane operators and cement kiln operators, NRCA is doing the next best thing: using simulations where installers complete a series of tasks under controlled conditions. The military and the medical, airline, utility and manufacturing industries also use simulations because employers want to be confident workers can complete tasks correctly without putting people or property at risk when a mistake is made.

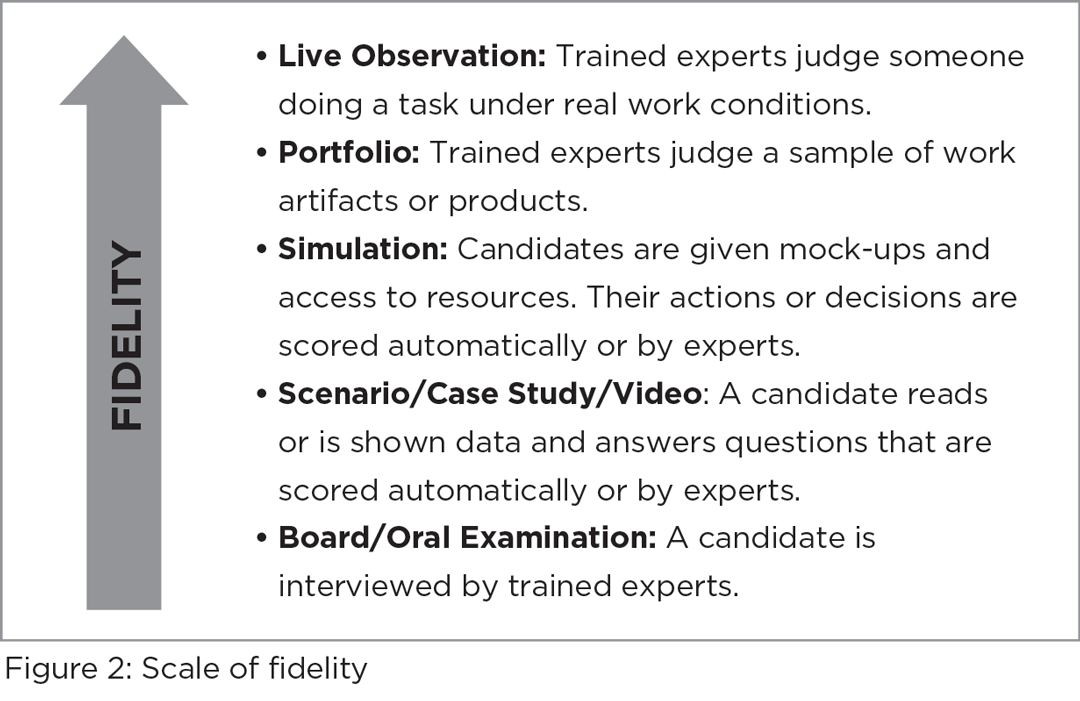

One way to think about hands-on performance tests is to use a scale of fidelity (see Figure 2). At the top of the scale is watching people do work in real time on the job. At the bottom of the scale is asking people to explain what they do and how they complete tasks. Simulations fall in the middle. When you ask people to explain what they do and how they do it, you are measuring their ability to explain, not do. Talking well is not the same as doing well.

The challenges

Creating hands-on performance tests sounds simple until you try to build them. For example, everyone must agree on what to test. During the past year, NRCA reconsidered what should be included in its tests. Initially, the plan was to test every installer on every task and every method. But this proved impractical and unnecessary.

For example, the test for installing asphalt shingles includes shingling a valley. There are at least three recognized ways of shingling valleys. The challenge is this: Should installers be required to demonstrate they can shingle valleys all three ways even if their jobs require them to only know one way, or does NRCA allow installers to pick one way and then offer a version of the test for that method? After some experimenting, NRCA decided when there are multiple accepted ways of completing a task such as shingling a valley, installers can ask to be tested on a specific method.

Equivalency

Another challenge posed by the testing standards is equivalency. Equivalency requires duplicating the testing environment—same weather conditions, same tools, same access to light and more—for every person tested. Equivalency also is key to proving reliability. NRCA must prove each test, whenever and wherever it is offered, is equivalent. This means the mock-ups must be the same, tasks are the same, physical conditions are the same, scoring rules are the same and so forth.

When NRCA created blueprints for mock-ups, the goal was to ensure mock-ups were similar enough that installers would be asked to complete the same tasks under similar conditions. This made sense until it was discovered contractors already have mock-ups to train their installers. Therefore, asking contractors to rebuild their mock-ups to match the blueprint was not feasible.

Instead, NRCA now asks that mock-ups include the same elements, such as a valley, corner, pipe, etc. The blueprints still are available to trade schools and contractors who want to host the tests but do not have mock-ups already built.

Qualifying the judges

When a test is hands-on, testing rules require the people who will judge the work to be qualified. NRCA ProCertification Qualified Assessors also are part of ensuring equivalency. This includes training the assessors and testing their ability to be fair and accurate. Qualified Assessors cannot be too easy or too difficult in their evaluations. They must give all installers the same amount of time and the same instructions.

NRCA must prove its assessors are not biased and administer the tests as agreed. To do this, NRCA trains its assessors, tests how accurately and consistently they judge people’s work, and tracks assessor scores for patterns, such as always passing or failing people on a part of the test. In the testing world, the consistency of judgments across assessors is called interrater reliability.
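To make interrater reliability concrete, here is a minimal sketch of one common way to quantify it: Cohen's kappa, which compares how often two assessors agree with how often they would agree by chance. The article does not say which statistic NRCA uses, so the choice of kappa, the function names and the sample pass/fail data are all assumptions made for illustration.

```python
from collections import Counter


def cohens_kappa(ratings_a, ratings_b):
    """Chance-corrected agreement between two raters who scored the same items."""
    if len(ratings_a) != len(ratings_b) or not ratings_a:
        raise ValueError("Both raters must score the same non-empty set of items")
    n = len(ratings_a)
    # Share of items on which the two raters gave the same judgment.
    observed = sum(a == b for a, b in zip(ratings_a, ratings_b)) / n
    # Agreement expected by chance, from each rater's own category frequencies.
    counts_a, counts_b = Counter(ratings_a), Counter(ratings_b)
    expected = sum(counts_a[c] * counts_b[c] for c in counts_a) / (n * n)
    if expected == 1.0:  # both raters used a single, identical category
        return 1.0
    return (observed - expected) / (1 - expected)


def pass_rate(ratings, passing="pass"):
    """Share of judgments that were a pass; a rate of 0 or 1 suggests a pattern."""
    return sum(r == passing for r in ratings) / len(ratings)


# Hypothetical data: two assessors judging the same eight recorded tasks.
assessor_a = ["pass", "pass", "fail", "pass", "fail", "pass", "fail", "pass"]
assessor_b = ["pass", "pass", "fail", "fail", "fail", "pass", "pass", "pass"]

kappa = cohens_kappa(assessor_a, assessor_b)
print(f"kappa = {kappa:.2f}, pass rate A = {pass_rate(assessor_a):.2f}")
```

A kappa of 1 means the assessors always agree, and a value near 0 means they agree no more often than chance would predict. Tracking each assessor's pass rate on a given part of the test is a simple way to spot the "always passing or failing" pattern described above.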

From the beginning, NRCA wanted to use simulations to test Qualified Assessors. The simulations require potential assessors to watch 12 short videos ranging from one to three minutes in length. Each video shows an installer completing a discrete task, such as trimming a corner, installing underlayment, nailing, checking safety equipment, applying sealants, etc. Each video contains a common mistake. Candidates get one chance to spot the mistake because, similar to real life, they cannot replay the action.

Efficiency

The next challenge was finding out how many installers one Qualified Assessor could observe at one time. Initially, NRCA planned for one assessor to test one installer at a time. But after a few experiments, it became clear assessors could observe four installers at a time if the conditions were right. However, the mock-ups must be close together and without visual barriers so the assessors always can see each installer’s actions. Installers also must have enough space to move around their mock-ups to do the work. But this created another challenge to equivalency—adequate space—because contractors and distributors that host the tests have limited space. Therefore, companies can host up to four installers for testing only if they have enough space.

Creating a scoring tool

From the start, NRCA began experimenting with a tool for scoring each installer’s performance. The goal was to have a handheld tablet that would calculate scores automatically. But before investing in the creation of an electronic scoring tool, NRCA wanted to be sure the method worked.

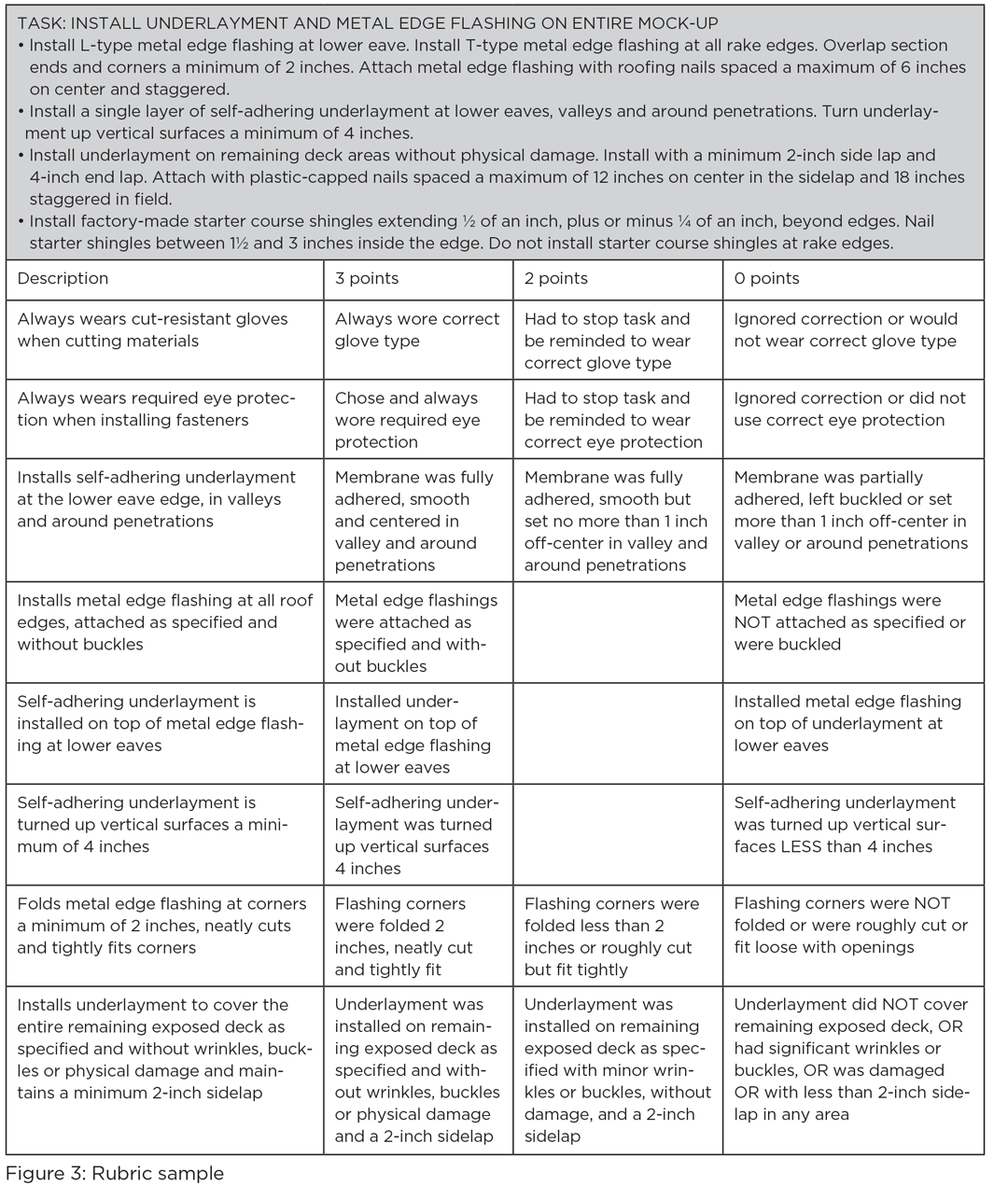

The scoring tool is called a rubric (see Figure 3). A rubric looks similar to a chart. Down the left side are the behaviors or steps required for each task, such as installing underlayment. Across the top is a three-point scale: three points for doing the step correctly, two points for making minor errors and zero points if the step is done incorrectly. Under each point value are descriptions of what qualifies for that point level. To refine the tool, assessors were asked each time a test was given for feedback about how to make the tool easier to use.

NRCA soon discovered the tool, as designed, presented a challenge. A three-point scale made it possible to pass the test even if mistakes meant the roof would leak with the first rain.
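The problem can be seen in a small sketch of the original rubric. The 3/2/0 point values come from the rubric described above; the 80% passing mark, the function names and the sample results are assumptions made only for illustration, since the article does not state a passing score.

```python
# Point values from the original rubric.
POINTS = {"correct": 3, "minor_errors": 2, "incorrect": 0}

# Assumption: a hypothetical passing mark; the article does not give one.
PASS_FRACTION = 0.80


def rubric_score(step_results):
    """Return (points earned, points possible) for one installer."""
    earned = sum(POINTS[result] for result in step_results.values())
    possible = POINTS["correct"] * len(step_results)
    return earned, possible


def passes(step_results, pass_fraction=PASS_FRACTION):
    """True when the earned share of points meets the passing mark."""
    earned, possible = rubric_score(step_results)
    return earned / possible >= pass_fraction


# Hypothetical installer: five steps done correctly, one critical step done wrong.
results = {
    "install underlayment": "correct",
    "nail shingles": "correct",
    "trim corner": "correct",
    "shingle valley": "correct",
    "check safety equipment": "correct",
    "apply sealants": "incorrect",  # the kind of mistake that could let a roof leak
}

earned, possible = rubric_score(results)
print(earned, possible, passes(results))
```

Under these assumed numbers the installer earns 15 of 18 points and passes, even though one step was done incorrectly. A zero for a step only withholds points, so strong scores elsewhere can mask it.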

What is good enough?

To improve its scoring tool, NRCA asked a group of manufacturers and contractors to rate the steps for each task in terms of their criticality to the integrity of the roof system installation. The group used a five-point scale with five meaning the most critical. The average rating for most steps was above four, meaning each of those steps was considered critical.

Next, NRCA looked at those steps that averaged 4.75 and higher. These were the steps that, when skipped or done incorrectly, cause a roof system to fail quickly. Under the original scoring method, skipping a step or doing it incorrectly meant only that installers were given no points for it. With the criticality ratings, installers now lose points when they fail to do a step or do it incorrectly. The number of points an installer loses depends on the criticality of the step. At a minimum, installers lose three points.
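A rough sketch of how criticality-based scoring could work follows. The 4.75 threshold and the three-point minimum deduction come from the description above. Everything else is an assumption for illustration: the article does not give the formula linking criticality to points lost, so doubling the deduction for the most critical steps is hypothetical, as are the function names, the sample ratings and the choice to keep three and two points for correct and minor-error steps.

```python
from statistics import mean

CRITICAL_THRESHOLD = 4.75  # from the article: steps averaging 4.75 and higher
MIN_PENALTY = 3            # from the article: installers lose at least three points


def average_criticality(ratings_by_step):
    """Average the reviewers' 1-to-5 criticality ratings for each step."""
    return {step: mean(ratings) for step, ratings in ratings_by_step.items()}


def penalty(avg_criticality):
    """Points lost for a skipped or incorrect step.

    Assumption: the most critical steps cost double the minimum. The article
    only says the deduction depends on criticality and is at least three points.
    """
    if avg_criticality >= CRITICAL_THRESHOLD:
        return 2 * MIN_PENALTY
    return MIN_PENALTY


def score(step_results, criticality):
    """Total score: 3 for correct, 2 for minor errors, a deduction otherwise."""
    total = 0
    for step, result in step_results.items():
        if result == "correct":
            total += 3
        elif result == "minor_errors":
            total += 2
        else:
            total -= penalty(criticality[step])
    return total


# Hypothetical ratings from four reviewers on the five-point scale.
ratings = {
    "install underlayment": [5, 5, 5, 4],
    "apply sealants": [5, 5, 5, 5],
    "trim corner": [4, 4, 5, 4],
}
criticality = average_criticality(ratings)

results = {
    "install underlayment": "correct",
    "apply sealants": "incorrect",
    "trim corner": "minor_errors",
}
print(score(results, criticality))
```

With these assumed values, the same performance that would have earned five points under the original method now scores below zero, because the incorrect step costs points instead of simply earning none.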

But the new scoring method presented yet another challenge. Now, installers can fail just part of the test, such as installing underlayment or applying sealants. NRCA currently is deciding whether installers who fail a part of the test should be required to retake the whole test or just the part they failed.

Every organization that offers a certification wants its tests to be valid and reliable. Yet, according to the American Psychological Association, the experts on validity and reliability, there is no perfect test. The best an organization can strive for is a close approximation within what is feasible.

Embracing the challenge

Hands-on performance tests bring challenges, such as selecting tasks that require the use of skills and knowledge you want to assess, creating simulations that test those skills and knowledge, setting up equivalent testing conditions so the test can be replicated, and training people to judge the work of others in ways that are fair. NRCA continues to embrace these challenges and share what it learns with other organizations wanting to use hands-on performance tests.

Judith Hale, Ph.D., is owner of Hale Associates, Downers Grove, Ill.